Picture a world where your smart devices can process data instantly, right at the edge of the network, without relying solely on distant cloud servers. Welcome to the realm of fog computing, a game-changer in the tech landscape.

But what exactly is fog computing, and why is it becoming a cornerstone of modern technology? In this article, we will demystify fog computing, exploring its definition, real-world use cases, and the ten essential components that make it tick.

Whether you’re a tech enthusiast, a business leader seeking cutting-edge solutions, or simply curious about the next big thing in computing, join us as we dive into the nuances of this transformative technology.

Prepare to discover how fog computing brings speed, efficiency, and intelligence closer to the edge, revolutionizing the way data is processed and utilized.

Key Takeaways

- Fog computing extends cloud capabilities to the edge of the network, reducing latency by processing data closer to its source.

- Unlike traditional cloud computing, fog computing disperses computing resources nearer to the edge, enabling local processing and reducing reliance on distant servers.

- Fog computing enhances efficiency, reliability, and data privacy by minimizing data transmission to centralized cloud servers.

- It facilitates real-time data processing and decision-making, making it ideal for applications requiring low latency and high responsiveness.

- Fog computing is essential for industries like smart cities, healthcare, and transportation, where immediate data analysis and local processing are critical for operational efficiency.


What is Fog Computing?

Fog computing, also called fogging or fog networking, is a decentralized computing infrastructure leveraging edge devices to perform significant computation, storage, and communication tasks locally rather than relying solely on centralized cloud servers.

Instead of relying on a distant cloud, fog computing disperses computing and storage resources nearer to the edge of the network. This allows devices to perform local processing, which reduces the need for sending large quantities of data over great distances to central data centers.

This approach places compute and storage resources between the data source and the cloud, so data can be processed near where it is created. Like edge computing, fog computing brings the benefits of cloud computing closer to the data origin to improve efficiency and responsiveness.

The term “fog” comes from meteorology, where fog is simply a cloud close to the ground, just as fog computing brings cloud-like resources close to the edge of the network. The term originated at Cisco, but the concept is no longer tied to a single vendor and is open to everyone.

Fog computing brings cloud capabilities to the edge of the network and provides the capacity for computing, storage, and networking services. It optimizes performance, especially in real-time data processing with low latency.

How Does Fog Computing Work?

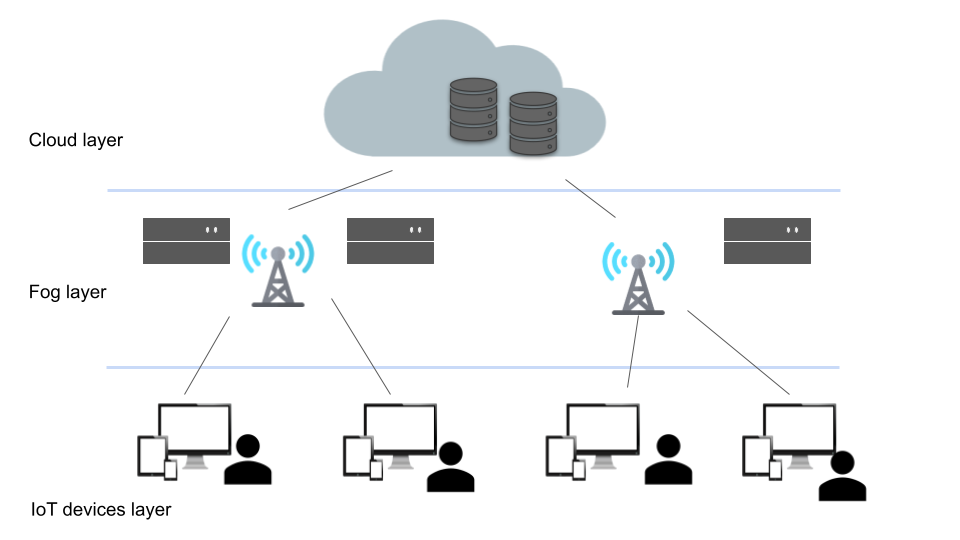

Fog computing operates by extending cloud capabilities to the network edge, positioning computation and storage resources closer to where data is generated. This decentralization facilitates immediate data processing and reduces reliance on distant cloud servers.

Fog nodes sit at the network edge and process the data coming from IoT devices, so less traffic has to travel from the edge to centralized data centers. This improves network efficiency and responsiveness, particularly for applications that require real-time analytics.

Fog computing complements cloud computing rather than replacing it. Fog nodes handle short-term analytics at the edge, while the cloud performs resource-intensive, longer-term analysis. This hybrid model divides the work between edge devices and centralized cloud servers so that each handles the processing it is best suited for.
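To make this division of labor concrete, here is a minimal Python sketch of a fog node that reacts to urgent readings locally and batches everything for later cloud analysis. The `HybridFogNode` class, the alert threshold, and the batch size are hypothetical; in a real deployment the `uploaded_batches` list would be replaced by an actual upload to a cloud service.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class HybridFogNode:
    """Hypothetical fog node: reacts locally, defers long-term analysis to the cloud."""

    alert_threshold: float  # assumed limit that calls for an immediate local reaction
    batch_size: int = 5  # readings collected before a batch is shipped to the cloud
    pending: List[float] = field(default_factory=list)
    uploaded_batches: List[List[float]] = field(default_factory=list)  # stand-in for a cloud upload

    def ingest(self, value: float) -> bool:
        """Process one reading; return True if a local action was triggered."""
        # Short-term analytics: decided on the fog node, with no cloud round trip.
        triggered = value > self.alert_threshold
        # Every reading is still kept for long-term analysis in the cloud.
        self.pending.append(value)
        if len(self.pending) >= self.batch_size:
            self.uploaded_batches.append(self.pending)
            self.pending = []
        return triggered


node = HybridFogNode(alert_threshold=80.0, batch_size=3)
actions = [node.ingest(v) for v in [70.0, 85.0, 60.0, 90.0]]
print(actions, node.uploaded_batches)
```

With these settings, the readings 85 and 90 trigger local actions immediately, while the first three readings travel to the cloud as a single batch.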

Fog computing reduces data transmission from the edge to distant cloud centers. This helps address privacy and security concerns, including regulatory issues related to sending raw data over the Internet. It is used in sectors such as smart grids, smart cities, smart buildings, and vehicle networks to improve operational efficiency and data security.

How and Why is Fog Computing Used?

Fog computing has emerged as a key enabler of edge computing, and it is increasingly vital in industries that need processing power close to their data sources. By adopting fog computing, organizations can offload tasks to nearby computation units instead of distant data centers, which speeds up data processing and eases the load on their network connections.

Fog computing enhances cloud services rather than competing with them. Whereas cloud computing depends on remote servers, fog computing performs essential data analysis and preliminary processing close to the data origin. This reduces bandwidth consumption and latency, and it lowers the risk of data loss during long-distance transmission.

- Edge Computing Enhancement: Fog computing shifts processing nearer to data sources, easing the burden on central servers.

- Efficiency in Data Processing: By managing data locally, fog computing quickens response times and decision-making.

- Reduction in Bandwidth Usage: Local processing notably decreases data traversing the network, thus conserving bandwidth.

- Seamless Network Connectivity: Processing decentralization maintains network reliability by preventing centralized bottlenecks.
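The bandwidth savings can be estimated with back-of-the-envelope arithmetic. The sketch below assumes a hypothetical fleet of 100 sensors, each producing 10 readings per second at 64 bytes per reading, and a fog node that condenses each sensor's stream into one 128-byte summary per minute. All figures are illustrative assumptions, not measurements.

```python
SECONDS_PER_DAY = 86_400


def daily_bytes(sensors: int, messages_per_second: float, bytes_per_message: int) -> float:
    """Bytes sent upstream per day by a fleet of identical sensors."""
    return sensors * messages_per_second * bytes_per_message * SECONDS_PER_DAY


# Assumed workload: every raw reading goes straight to the cloud.
raw = daily_bytes(sensors=100, messages_per_second=10, bytes_per_message=64)

# Assumed fog behavior: one 128-byte summary per sensor per minute.
summarized = daily_bytes(sensors=100, messages_per_second=1 / 60, bytes_per_message=128)

print(f"raw: {raw / 1e9:.2f} GB/day, summarized: {summarized / 1e6:.2f} MB/day, "
      f"reduction: {raw / summarized:.0f}x")
```

Under these assumptions, roughly 5.5 GB of raw data per day shrinks to about 18 MB of summaries, a 300-fold reduction. The real ratio depends on how aggressively the fog layer can summarize without discarding information the cloud still needs.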

| Aspect | Cloud Computing Only | With Fog Computing Added |
|---|---|---|
| Data Storage and Access | Remote, centralized servers | Local nodes for immediate access |
| Processing Latency | Higher, due to distance to data centers | Lower, thanks to proximity to data sources |
| Bandwidth Requirements | High, since raw data is sent to the cloud | Lower, due to local data handling |
| Scalability | Scales centrally, inside data centers | Scales geographically, by adding distributed nodes |

Use Cases and Applications of Fog Computing

Fog computing is transforming how data is managed and processed across sectors such as smart cities, healthcare, manufacturing, and transportation, making their operations more efficient and responsive.

| Application Domain | Function Enabled by Fog Computing | Benefits |
|---|---|---|
| Smart Cities | Real-time traffic analysis and crowd monitoring | Enhanced public safety and traffic flow optimization |
| Healthcare | Remote patient monitoring and data analysis | Improved patient care and efficient resource allocation |
| IIoT/Manufacturing | Predictive analytics for equipment maintenance | Reduced downtime and operational cost savings |
| Transportation | Data exchange for ITS and autonomous vehicles | Increased transportation safety and efficiency |

Smart Cities and Urban Infrastructure Management

In smart cities, fog computing significantly improves urban infrastructure management. Fog nodes, installed near traffic signals and surveillance cameras, enhance traffic control and public safety. They allow for immediate adjustments in traffic and more precise monitoring. This results in safer, more efficient city environments.
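As an illustration of such local adjustments, the Python sketch below splits one traffic-signal cycle among approaches in proportion to the vehicle queues a fog node might measure from nearby cameras. The function name, the 10-second minimum green time, and the simple proportional rule are assumptions for illustration; real adaptive signal control relies on considerably more sophisticated models.

```python
from typing import Dict


def split_green_time(cycle_s: float, queues: Dict[str, int], min_green_s: float = 10.0) -> Dict[str, float]:
    """Divide one signal cycle among approaches in proportion to their queues."""
    if not queues:
        raise ValueError("at least one approach is required")
    # Every approach gets a minimum green time; only the remainder is demand-driven.
    spare = cycle_s - min_green_s * len(queues)
    if spare < 0:
        raise ValueError("cycle is too short for the minimum green times")
    total = sum(queues.values())
    if total == 0:
        # No vehicles detected: share the spare time evenly.
        return {a: min_green_s + spare / len(queues) for a in queues}
    return {a: min_green_s + spare * q / total for a, q in queues.items()}


print(split_green_time(60.0, {"north_south": 30, "east_west": 10}))
```

With a 60-second cycle and queues of 30 and 10 vehicles, each approach first receives its 10-second minimum, and the remaining 40 seconds are split 3:1, giving 40 and 20 seconds. Because the decision is made on the fog node, the plan can be updated every cycle without a cloud round trip.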

Healthcare and Telemedicine

Fog computing is also changing telemedicine and healthcare. It makes remote patient monitoring more efficient by analyzing data close to the patient, so care teams can respond promptly. Because patient data is processed locally, it can be handled more quickly and sent over the Internet less often, which supports better outcomes and healthcare services.

Industrial Internet of Things (IIoT) and Manufacturing

The Industrial Internet Consortium highlights fog computing’s impact on IIoT and manufacturing. It enables devices to perform predictive maintenance, reducing downtime and lengthening equipment lifespan. Fog computing enhances decision-making and operational efficiency by processing data onsite.
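Predictive maintenance often starts with spotting readings that break from recent behavior. The sketch below, a hypothetical `VibrationMonitor`, uses a rolling z-score over a sliding window, which is one simple technique among many; the window size and the 3-sigma limit are illustrative assumptions.

```python
from collections import deque
from statistics import mean, pstdev


class VibrationMonitor:
    """Flags readings that deviate strongly from a sliding window (rolling z-score)."""

    def __init__(self, window: int = 20, z_limit: float = 3.0):
        self.readings = deque(maxlen=window)  # only the most recent readings are kept
        self.z_limit = z_limit

    def check(self, value: float) -> bool:
        """Return True if the value is anomalous relative to the current window."""
        anomalous = False
        if len(self.readings) >= 2:
            mu = mean(self.readings)
            sigma = pstdev(self.readings)
            # Skip the test when the window has no spread, to avoid dividing by zero.
            if sigma > 0 and abs(value - mu) / sigma > self.z_limit:
                anomalous = True
        self.readings.append(value)
        return anomalous


monitor = VibrationMonitor(window=10, z_limit=3.0)
print([monitor.check(v) for v in [1.0, 1.2, 1.0, 1.2, 1.0, 1.2, 5.0]])
```

Because the check runs on the fog node, a sudden spike can raise a maintenance alert immediately, while the raw vibration history can still be sent to the cloud for longer-term modeling.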

Autonomous Vehicles and Intelligent Transportation Systems (ITS)

Autonomous vehicles are revolutionizing transportation, with fog computing playing a crucial role. It ensures rapid data processing between vehicles and infrastructure, promoting safer travel. Fog computing maintains low latency and high reliability as vehicles share essential data swiftly.

Advantages and Disadvantages of Fog Computing

The main advantages and disadvantages of fog computing are summarized in the table below:

| Advantages of Fog Computing | Disadvantages of Fog Computing |
|---|---|
| Lower Latency | Security Concerns |
| Reduced Network Traffic | Limited Scalability |
| Enhanced Data Privacy | Potential Data Congestion |
| Improved Reliability | Dependency on Edge Devices |
| Offline Operation Support | Management Complexity |

10 Basic Components of Fog Computing

The components of fog computing work together to improve network efficiency, provide reliable data storage, and make the most of the available computing power. Because fog computing relies on edge nodes that bring resources closer to where data originates, it also improves the performance of networks and gateways.

Here’s a detailed guide to the core elements of an effective fog system.

| Component | Function | Relevance in Fog Computing |
|---|---|---|
| Fog Nodes | Initial Data Processing | Reduces latency by processing data close to the source |
| Fog Computing Infrastructure | Distributed Hardware and Software Resources | Forms the decentralized backbone for data handling |
| Fog Services | Application Services and APIs | Empowers devices and systems to utilize fog resources |
| Fog Orchestrator | Resource Allocation and Management | Manages computing resources for optimal performance |
| Data Processors | Data Analysis and Transformation | Essential for immediate insights at the edge of the network |
| Resource Manager | Scalable Resource Distribution | Balances system load and manages resources efficiently |
| Security Tools | Data Protection and Threat Mitigation | Secures network and data integrity against intrusions |
| Networking Infrastructure | Communication Channels | Facilitates reliable data flow between fog components |
| Monitoring and Management Tools | Performance Oversight | Enables constant system surveillance and control |
| Integration Interfaces | System Interconnectivity | Connects fog computing with other networks and services |

1. Fog Nodes

A fog node is a computing device deployed at the edge of the network, closer to the data sources and end users. Fog nodes serve as the primary processing units in fog computing architecture, enabling data processing, storage, and communication.
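Because fog nodes sit between devices and the cloud, a common pattern is store-and-forward: keep accepting data locally while the uplink is down and deliver it once connectivity returns, which is also what makes offline operation possible. The minimal Python sketch below assumes a hypothetical `send_upstream` callback that returns `True` on successful delivery; the buffer capacity is illustrative.

```python
from collections import deque
from typing import Callable, Deque


class StoreAndForwardNode:
    """Hypothetical fog node that keeps accepting data while the cloud uplink is down."""

    def __init__(self, send_upstream: Callable[[dict], bool], capacity: int = 1000):
        # send_upstream is an assumed callback: it returns True once the cloud accepts a record.
        self.send_upstream = send_upstream
        # Bounded buffer: when full, the oldest record is discarded to make room.
        self.pending: Deque[dict] = deque(maxlen=capacity)

    def handle(self, record: dict) -> None:
        """Accept a record locally, then try to forward everything buffered so far."""
        self.pending.append(record)
        self.flush()

    def flush(self) -> int:
        """Deliver buffered records in arrival order; stop at the first failure."""
        sent = 0
        while self.pending:
            if not self.send_upstream(self.pending[0]):
                break  # uplink unavailable: keep the record for a later attempt
            self.pending.popleft()
            sent += 1
        return sent
```

The bounded `deque` is a deliberate design choice: during a long outage, the oldest records are dropped rather than exhausting the node's limited memory.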

2. Fog Computing Infrastructure

The infrastructure includes hardware components such as servers, routers, switches, and gateways deployed in the fog computing environment. These components provide the necessary computing, networking, and storage resources to support fog computing applications.

3. Fog Services

Fog services are software applications and middleware components deployed on fog nodes to provide specific functionalities such as data analytics, real-time processing, security, and communication. These services enable the execution of fog computing tasks and workflows.

4. Fog Orchestrator

The fog orchestrator is responsible for managing and coordinating the operation of fog nodes and services within the fog computing environment. It allocates resources, schedules tasks, and ensures the efficient execution of fog computing workflows.
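One of the orchestrator's core decisions is where to run a task. The sketch below shows a simple, hypothetical placement policy: choose the lowest-latency fog node that has enough spare CPU and meets the task's deadline, fall back to the cloud if the cloud meets the deadline, and otherwise reject the task. The `Node` and `Task` fields and the greedy rule are assumptions; production orchestrators weigh many more factors.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Node:
    name: str
    free_cpu: float  # spare CPU cores (assumed unit)
    latency_ms: float  # measured round-trip time from the data source


@dataclass
class Task:
    name: str
    cpu: float  # CPU cores the task needs
    max_latency_ms: float  # deadline the task must meet


def place(task: Task, fog_nodes: List[Node], cloud: Node) -> Optional[Node]:
    """Pick the lowest-latency fog node that fits the task; fall back to the cloud."""
    candidates = [n for n in fog_nodes
                  if n.free_cpu >= task.cpu and n.latency_ms <= task.max_latency_ms]
    if candidates:
        chosen = min(candidates, key=lambda n: n.latency_ms)
    elif cloud.latency_ms <= task.max_latency_ms:
        chosen = cloud  # no suitable fog capacity, but the cloud still meets the deadline
    else:
        return None  # nothing can meet the deadline
    chosen.free_cpu -= task.cpu  # reserve the capacity on the chosen node
    return chosen


fog = [Node("gateway-a", free_cpu=1.0, latency_ms=5), Node("gateway-b", free_cpu=4.0, latency_ms=8)]
cloud = Node("cloud", free_cpu=float("inf"), latency_ms=60)  # cloud treated as effectively unlimited
print(place(Task("video-analytics", cpu=2.0, max_latency_ms=20), fog, cloud))
```

In this example, the latency-sensitive analytics task lands on `gateway-b`, because `gateway-a` lacks the CPU. A heavy reporting job with a relaxed deadline would go to the cloud, and a task that neither tier can serve in time is rejected.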

5. Data Processors

Data processors are software components deployed on fog nodes to process and analyze data generated by edge devices and sensors. These processors perform tasks such as data filtering, aggregation, transformation, and analytics to extract actionable insights from raw data.
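The filtering, transformation, and aggregation steps described above can be sketched as a single function that turns a window of raw readings into a compact summary. The plausible temperature range and the Celsius-to-Fahrenheit conversion are assumptions chosen purely for illustration.

```python
from statistics import mean
from typing import Dict, Iterable, Optional


def process_window(raw_celsius: Iterable[Optional[float]]) -> Dict[str, float]:
    """Filter, transform, and aggregate one window of temperature readings."""
    # Filtering: drop missing values and readings outside an assumed plausible sensor range.
    valid = [r for r in raw_celsius if r is not None and -40.0 <= r <= 125.0]
    if not valid:
        return {"count": 0}
    # Transformation: convert to Fahrenheit for a hypothetical downstream consumer.
    fahrenheit = [r * 9 / 5 + 32 for r in valid]
    # Aggregation: only this summary, not the raw readings, needs to leave the fog node.
    return {"count": len(fahrenheit), "min_f": min(fahrenheit),
            "max_f": max(fahrenheit), "mean_f": mean(fahrenheit)}


print(process_window([20.0, None, 25.0, 999.0, 30.0]))
```

Here the missing value and the implausible 999-degree reading are discarded, and the three remaining readings are reduced to a four-field summary.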

6. Resource Manager

The resource manager monitors and manages the computing, storage, and networking resources available on fog nodes. It optimizes resource utilization, allocates resources based on workload demands, and ensures efficient resource provisioning to support fog computing applications.
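At its simplest, a resource manager keeps track of what each workload has reserved. The hypothetical `ResourceManager` below admits a workload only if both its CPU and memory requests fit, and reports utilization as the fraction of the scarcer resource in use; the units and the admission rule are illustrative assumptions.

```python
from typing import Dict, Tuple


class ResourceManager:
    """Tracks CPU and memory reservations on a single fog node (hypothetical units)."""

    def __init__(self, cpu_cores: float, memory_mb: int):
        self.capacity = {"cpu": float(cpu_cores), "mem": memory_mb}
        self.used = {"cpu": 0.0, "mem": 0}
        self.allocations: Dict[str, Tuple[float, int]] = {}

    def allocate(self, workload_id: str, cpu: float, mem: int) -> bool:
        """Reserve resources only if both CPU and memory fit; otherwise reject."""
        if workload_id in self.allocations:
            return False  # already running on this node
        if (self.used["cpu"] + cpu > self.capacity["cpu"]
                or self.used["mem"] + mem > self.capacity["mem"]):
            return False
        self.used["cpu"] += cpu
        self.used["mem"] += mem
        self.allocations[workload_id] = (cpu, mem)
        return True

    def release(self, workload_id: str) -> None:
        """Return a finished workload's resources to the pool."""
        cpu, mem = self.allocations.pop(workload_id)
        self.used["cpu"] -= cpu
        self.used["mem"] -= mem

    def utilization(self) -> float:
        """Fraction of the scarcer resource in use, a simple input for load balancing."""
        return max(self.used["cpu"] / self.capacity["cpu"],
                   self.used["mem"] / self.capacity["mem"])
```

An orchestrator could compare `utilization()` across nodes and send new work to the least-loaded one.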

7. Security Tools

Security tools are deployed on fog nodes to ensure the security and integrity of data and applications in the fog computing environment. These tools include firewalls, intrusion detection systems, encryption mechanisms, and access control mechanisms to protect against cyber threats and unauthorized access.
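Encryption and access control are usually handled by established tools such as TLS and firewalls, but message integrity is easy to illustrate. The sketch below uses Python's standard `hmac` module to sign and verify sensor messages with a shared key. The key, the JSON message layout, and the assumption that each device shares a key with its fog node are hypothetical; HMAC shows that a message was not altered and came from a key holder, but it does not hide the message contents.

```python
import hashlib
import hmac
import json

# Assumption: each device shares a secret key with its fog node, provisioned out of band.
SHARED_KEY = b"example-device-key"


def sign(payload: dict, key: bytes = SHARED_KEY) -> dict:
    """Attach an HMAC-SHA256 tag computed over a canonical JSON encoding of the payload."""
    body = json.dumps(payload, sort_keys=True).encode()
    tag = hmac.new(key, body, hashlib.sha256).hexdigest()
    return {"payload": payload, "hmac": tag}


def verify(message: dict, key: bytes = SHARED_KEY) -> bool:
    """Recompute the tag on the fog node and compare it with the one received."""
    body = json.dumps(message["payload"], sort_keys=True).encode()
    expected = hmac.new(key, body, hashlib.sha256).hexdigest()
    # compare_digest avoids leaking information through timing differences.
    return hmac.compare_digest(expected, message["hmac"])


msg = sign({"sensor": "t-1", "value": 21.5})
print(verify(msg))
```

Any change to the payload in transit, such as an altered sensor value, produces a different tag, so the fog node can reject the message before acting on it.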

8. Networking Infrastructure

Networking infrastructure components such as routers, switches, and wireless access points provide connectivity between fog nodes, end devices, and cloud resources. They enable data transmission, communication, and collaboration within the fog computing environment.

9. Monitoring and Management Tools

Monitoring and management tools are used to monitor the performance, health, and status of fog nodes, services, and applications. These tools provide visibility into resource utilization, network traffic, and application performance, enabling administrators to identify and troubleshoot issues proactively.
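A basic building block of monitoring is the heartbeat: each fog node reports in periodically, and nodes that go quiet are flagged. The sketch below is a hypothetical `HeartbeatMonitor`; the timeout is illustrative, and the optional `now` parameter exists only to make the logic easy to test without waiting.

```python
import time
from typing import Dict, List, Optional


class HeartbeatMonitor:
    """Marks fog nodes unhealthy when their heartbeats stop arriving."""

    def __init__(self, timeout_s: float = 15.0):
        self.timeout_s = timeout_s
        self.last_seen: Dict[str, float] = {}  # node id -> time of last heartbeat

    def heartbeat(self, node_id: str, now: Optional[float] = None) -> None:
        """Record that a node has just reported in."""
        self.last_seen[node_id] = time.monotonic() if now is None else now

    def unhealthy(self, now: Optional[float] = None) -> List[str]:
        """Return the sorted ids of nodes silent for longer than the timeout."""
        now = time.monotonic() if now is None else now
        return sorted(n for n, t in self.last_seen.items() if now - t > self.timeout_s)
```

A monotonic clock is used by default so that wall-clock adjustments on the monitoring host cannot make nodes appear to time out or recover.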

10. Integration Interfaces

Integration interfaces facilitate communication and interoperability between fog computing components, edge devices, and cloud systems. These interfaces support standard protocols and APIs for data exchange, service invocation, and integration with existing IT infrastructure.
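Integration interfaces work best when every component agrees on a message format. The sketch below defines a hypothetical JSON envelope with a schema version field, so producers and consumers can evolve independently. The field names and the versioning scheme are assumptions, not a published standard.

```python
import json
from dataclasses import asdict, dataclass


@dataclass
class Reading:
    device_id: str
    metric: str
    value: float
    timestamp: float  # seconds since the Unix epoch


SCHEMA_VERSION = 1  # hypothetical version number carried in every message


def to_wire(reading: Reading) -> str:
    """Serialize a reading into a versioned JSON envelope."""
    return json.dumps({"schema": SCHEMA_VERSION, "data": asdict(reading)})


def from_wire(message: str) -> Reading:
    """Parse an envelope, rejecting schema versions this consumer does not understand."""
    envelope = json.loads(message)
    if envelope.get("schema") != SCHEMA_VERSION:
        raise ValueError(f"unsupported schema version: {envelope.get('schema')}")
    return Reading(**envelope["data"])


wire = to_wire(Reading("pump-7", "pressure_kpa", 101.3, 1700000000.0))
print(wire)
```

The resulting string can be carried over HTTP, MQTT, or any other transport, and the version check lets a consumer reject messages it does not understand instead of misreading them.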

History of Fog Computing

| Evolutionary Phase | Key Developments | Contributing Factors |
|---|---|---|
| Distributed Computing Era | Foundation of computation across networked devices | Advancements in network technology |
| Cloud Computing Emergence | Centralization of storage and computation in data centers | Increased internet speed and data generation |
| Edge Computing Influence | Introduction of processing at network’s edge | Need for reduced latency in data processing |
| Conceptualization of Fog Computing | Cisco Systems’ advocacy for distributed intelligence | IoT device proliferation and its demands on infrastructure |

Fog computing has its roots in distributed computing: the idea of performing computation across a network of devices that were often geographically separated. That idea, however, was only the starting point.

As cloud computing took shape, data increasingly flowed to centralized data centers built around high-capacity servers. Over time, the growing volume of data from countless devices flooded these centralized facilities and created bottlenecks, which prompted a change in architecture.

Edge computing was introduced to address this problem by moving computational power closer to the source of data, at the network’s edge. Processing data there enabled faster responses, which real-time operations require.

Cisco Systems played a key role in the next step toward fog computing. It proposed an architecture that combined cloud computing and edge computing, distributing intelligence and computational power across the network rather than concentrating it in one place. The discussion that followed reflected a broader move toward decentralized computing and laid the foundation for the distributed model that is the hallmark of fog computing today.

The inception of fog computing was a key achievement for Cisco Systems, influencing networking technology.

- Fog computing emerged to meet the demands of an expanding IoT device landscape.

- It introduces enhanced responsiveness and reliability, catering to real-time processing needs.

Fog Computing vs. Cloud Computing

Here’s a comparison between fog computing and cloud computing presented in a table format:

| Aspect | Fog Computing | Cloud Computing |
|---|---|---|
| Location | Processing occurs at or near the edge devices | Processing occurs in centralized data centers |
| Latency | Low latency due to proximity to data source | Higher latency due to data transfer to remote servers |
| Scalability | Limited scalability due to edge device constraints | Highly scalable with the ability to add more resources |
| Data Privacy | Enhanced data privacy as data stays on-premises | Data privacy concerns due to data being stored remotely |
| Reliability | Improved reliability as data processing is local | Reliability can be affected by network and server issues |
| Offline Operation | Supports offline operation in case of network loss | Requires continuous internet connectivity for access |

Fog Computing vs. Edge Computing

Here’s a comparison between fog computing and edge computing presented in a table format:

| Aspect | Fog Computing | Edge Computing |
|---|---|---|
| Location | Processing occurs in a local network layer (gateways, routers, fog nodes) between devices and the cloud | Processing occurs on or directly next to the devices that generate the data |
| Network Dependence | Requires connectivity to the cloud for some tasks | Can operate independently with local processing |
| Latency | Offers lower latency than cloud computing | Provides ultra-low latency for time-sensitive tasks |
| Scalability | Scalability is limited compared to edge computing | Highly scalable due to distributed architecture |
| Resource Constraints | Can leverage resources from nearby edge devices | May face resource constraints on edge devices |
| Data Processing | Offers distributed data processing capabilities | Focuses on processing data locally at the edge |
| Use Cases | Suitable for scenarios with distributed sensors | Ideal for applications requiring real-time processing |

Integration of Fog Computing with the Internet of Things (IoT)

IoT devices are richly equipped with sensors that produce large volumes of data, so processing that data promptly is essential for efficient operations.

Fog computing excels at local processing, placing computation and storage as close to the network edge as possible. Because less sensor data has to travel back to a distant cloud service, unnecessary bandwidth consumption is avoided.

With latency reduced, IoT devices can react faster, since real-time analytics and decision-making happen nearby. Less traffic also means a lighter load on the network infrastructure, which improves overall system performance.

Fog computing also extends beyond data processing into device management. Letting IoT devices process and analyze data locally makes them more independent and efficient, further reducing their dependence on centralized systems.

In essence, fog computing is a vital bridge between the capabilities of a cloud and the IoT environment. Its distributed nature meets the unique needs of IoT and Industrial IoT, allowing for smooth data processing and analysis while taking performance and resource utilization into account.

Should I Use Fog Computing?

When considering fog computing, weigh the benefits it offers, especially if your current cloud infrastructure is not meeting your demands. The following factors should drive your decision:

- Reduced Latency: For operations requiring immediate data processing, fog’s lower latency is vital. It places processing nearer to end devices like smartphones, ensuring swift analysis and actions.

- Improve Efficiency: An efficient system is crucial for any enterprise. Fog computing enhances efficiency by analyzing data locally. This minimizes the data sent to central servers.

- Detect Anomalies: For operations that need quick identification of irregularities, fog computing is key. Its distributed nature improves the monitoring of network traffic to identify anomalies swiftly.

- Preserve Network Bandwidth: With less data moving to and from the cloud, network bandwidth is preserved. This is essential for enterprises dealing with vast amounts of data or needing careful bandwidth management.

Conclusion

In conclusion, fog computing is revolutionizing how we handle data by bringing processing power closer to the data source. This paradigm shift reduces latency, enhances efficiency, and improves data privacy, making it ideal for industries like smart cities, healthcare, and transportation.

By decentralizing data processing, fog computing ensures real-time analytics and decision-making, crucial for modern, fast-paced operations.

To leverage fog computing, evaluate its benefits against initial setup costs and security considerations. For applications where speed and local data processing are essential, fog computing offers significant advantages.

Intrigued by the Power of Fog Computing?

Dive deeper at texmg.com! Explore more expert blogs for valuable insights, and don’t forget to discover our cost-effective IT services to optimize your computing infrastructure.

Let’s navigate the fog together!

FAQ

What is the Fog Computing Approach?

Fog computing extends cloud computing to the edge of the network, bringing computing resources closer to data sources and end-users.

What is Fog Computing vs Edge Computing?

Fog computing and edge computing are similar concepts, but fog computing typically involves a hierarchical architecture with multiple layers of processing between the edge and the cloud.

What is the Technology of Fog Computing?

Fog computing utilizes distributed computing resources located at the network edge, such as routers, switches, and gateways, to process data and perform computations closer to where data is generated.

How Does Fog Computing Reduce Latency?

By processing data closer to its source, fog computing reduces the distance data needs to travel, minimizing latency and improving response times for time-sensitive applications.
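The effect of distance can be quantified. Light in optical fiber travels at roughly 200,000 km/s, or about 5 microseconds per kilometer, so propagation alone sets a floor on round-trip time. The sketch below ignores queuing, routing, and processing delays, which in practice add considerably more; the distances in the example are illustrative.

```python
# Assumption: signals in optical fiber travel at roughly 200,000 km/s (about two thirds
# of the speed of light in a vacuum), i.e. 200 km per millisecond.
FIBER_KM_PER_MS = 200.0


def round_trip_ms(distance_km: float) -> float:
    """Lower bound on round-trip propagation delay over fiber, ignoring all other delays."""
    return 2 * distance_km / FIBER_KM_PER_MS


print(round_trip_ms(1500), round_trip_ms(2))
```

A cloud region 1,500 km away costs at least 15 ms per round trip before any processing happens, while a fog node 2 km away costs about 0.02 ms.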